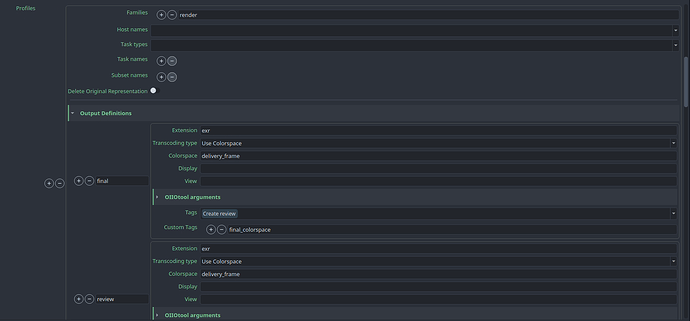

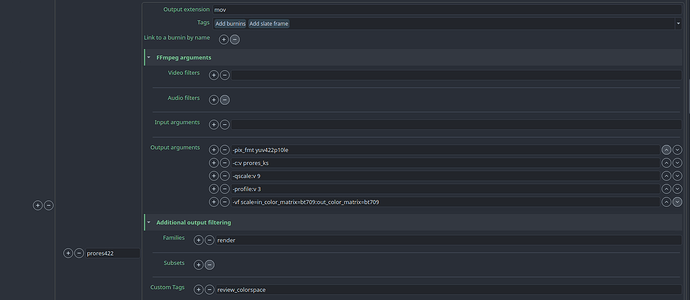

Making reviews with Deadline is now a three-step process: the main render, the ExtractReviewDataMov step that makes a ProRes 4444 from the render, and the extract review step that transcodes the ProRes to the desired output.

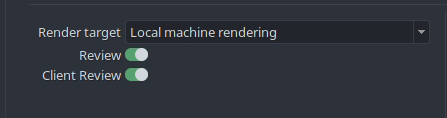

The first step can be distributed to many machines, and the third step is often not too slow. The second step, however, runs on a single machine and can easily take much longer than the distributed main render.

Thinking out loud about a way to speed up the process by distributing the middle step across more Deadline workers.

One way would be to have ExtractReviewDataMov render to a file sequence instead of a ProRes file. Maybe that is already possible? That way, we could distribute the Nuke render across many workers.
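To make the distribution idea concrete, here is a minimal sketch of how a frame range could be cut into per-worker chunks once the output is a sequence rather than a single mov. The function name `split_frame_range` and the chunk size are my own illustration, not anything that exists in OpenPype; Deadline normally does this kind of splitting itself from the job's frame list and chunk size.

```python
def split_frame_range(first, last, chunk_size):
    """Split an inclusive frame range into inclusive (start, end) chunks.

    Hypothetical helper for illustration only: each chunk could be
    rendered by a different worker because frames of an image sequence
    are independent files, unlike frames inside one mov.
    """
    if chunk_size < 1:
        raise ValueError("chunk_size must be at least 1")
    if last < first:
        raise ValueError("last frame must not be before first frame")

    chunks = []
    start = first
    while start <= last:
        # The final chunk may be shorter than chunk_size.
        end = min(start + chunk_size - 1, last)
        chunks.append((start, end))
        start = end + 1
    return chunks


if __name__ == "__main__":
    for start, end in split_frame_range(1001, 1010, 4):
        print(f"{start}-{end}")
```

With a single mov as the output, the whole range has to stay in one task, which is why the middle step cannot be spread out today.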

Another way would be to render the intermediate file sequence at the same time as the main render. Maybe even just another write node (one or more) in the OpenPype render group? This would have a further benefit: the worker that renders the main render already has all the script resources in memory, and there would be no need for another job in the Deadline job group.

Using oiiotool instead of Nuke looks limiting right now. Color conversion is there, but resizing and effect baking (typically CDL + LUT) are missing.

Rendering to a sequence has its own issues. Image file sequences do not hold metadata crucial for editorial, like source timecode and reel ID. Nuke stored those in the ExtractReviewDataMov output when they were present in the EXRs, so we would need a way to push the source timecode from the EXRs to the reviews. Also, rendering to image sequences should be configurable, similar to the Nuke ImageIO settings, to allow some flexibility in quality. Maybe JPEG at quality 80 (very fast and great for h264), or 16-bit PNG (for “final” outputs).
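For the timecode part, a rough sketch of the arithmetic involved: if the source timecode is read from one EXR frame, the start timecode for the review can be derived by offsetting it by a number of frames. This assumes non-drop-frame timecode and an integer frame rate, and the function names are hypothetical; reading the value from the EXR and writing it into the review are left out.

```python
def timecode_to_frames(timecode, fps):
    """Convert a non-drop-frame 'HH:MM:SS:FF' string to a frame count."""
    hours, minutes, seconds, frames = (int(p) for p in timecode.split(":"))
    return ((hours * 60 + minutes) * 60 + seconds) * fps + frames


def frames_to_timecode(frame_count, fps):
    """Convert a frame count back to a non-drop-frame 'HH:MM:SS:FF' string."""
    # Wrap around at 24 hours so negative offsets stay valid.
    frame_count %= 24 * 3600 * fps
    frames = frame_count % fps
    total_seconds = frame_count // fps
    seconds = total_seconds % 60
    minutes = (total_seconds // 60) % 60
    hours = total_seconds // 3600
    return f"{hours:02d}:{minutes:02d}:{seconds:02d}:{frames:02d}"


def offset_timecode(timecode, frame_offset, fps):
    """Shift a timecode by a signed number of frames (assumed helper)."""
    return frames_to_timecode(timecode_to_frames(timecode, fps) + frame_offset, fps)


if __name__ == "__main__":
    # Timecode 30 frames after 01:00:00:00 at 24 fps.
    print(offset_timecode("01:00:00:00", 30, 24))
```

Drop-frame rates such as 29.97 would need different handling, so this only covers the simple case.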

Do you think switching the Nuke bake from a mov to an image sequence is worth the trouble?