=======================================================

Log

=======================================================

```
2025-02-20 14:33:14: 0: Loading Job's Plugin timeout is Disabled
2025-02-20 14:33:14: 0: SandboxedPlugin: Render Job As User disabled, running as current user 'admin'
2025-02-20 14:33:26: 0: Executing plugin command of type 'Initialize Plugin'
2025-02-20 14:33:26: 0: INFO: Executing plugin script 'C:\ProgramData\Thinkbox\Deadline10\workers\ADCDSB-01\plugins\67b72f150229a3b988f44f71\Houdini.py'
2025-02-20 14:33:26: 0: INFO: Plugin execution sandbox using Python version 3
2025-02-20 14:33:26: 0: INFO: About: Houdini Plugin for Deadline
2025-02-20 14:33:26: 0: INFO: The job's environment will be merged with the current environment before rendering
2025-02-20 14:33:26: 0: Done executing plugin command of type 'Initialize Plugin'
2025-02-20 14:33:26: 0: Start Job timeout is disabled.
2025-02-20 14:33:26: 0: Task timeout is disabled.
2025-02-20 14:33:26: 0: Loaded job: HFT_sh01_layout_v011.hiplc - usdrenderMain [EXPORT IFD] (67b72f150229a3b988f44f71)
2025-02-20 14:33:27: 0: Executing plugin command of type 'Start Job'
2025-02-20 14:33:27: 0: DEBUG: S3BackedCache Client is not installed.
2025-02-20 14:33:27: 0: INFO: Executing global asset transfer preload script 'C:\ProgramData\Thinkbox\Deadline10\workers\ADCDSB-01\plugins\67b72f150229a3b988f44f71\GlobalAssetTransferPreLoad.py'
2025-02-20 14:33:27: 0: INFO: Looking for legacy (pre-10.0.26) AWS Portal File Transfer...
2025-02-20 14:33:27: 0: INFO: Looking for legacy (pre-10.0.26) File Transfer controller in C:/Program Files/Thinkbox/S3BackedCache/bin/task.py...
2025-02-20 14:33:27: 0: INFO: Could not find legacy (pre-10.0.26) AWS Portal File Transfer.
2025-02-20 14:33:27: 0: INFO: Legacy (pre-10.0.26) AWS Portal File Transfer is not installed on the system.
```

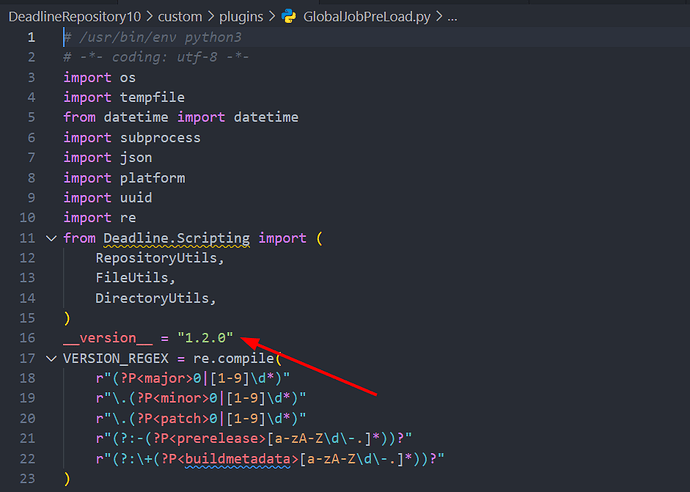

```
2025-02-20 14:33:27: 0: INFO: Executing global job preload script 'C:\ProgramData\Thinkbox\Deadline10\workers\ADCDSB-01\plugins\67b72f150229a3b988f44f71\GlobalJobPreLoad.py'
2025-02-20 14:33:27: 0: PYTHON: *** GlobalJobPreload start ...
2025-02-20 14:33:27: 0: PYTHON: >>> Getting job ...
2025-02-20 14:33:27: 0: PYTHON: >>> Injecting Ayon environments ...
2025-02-20 14:33:27: 0: PYTHON: --- Ayon executable: C:\Program Files\Ynput\AYON 1.1.1\ayon_console.exe
2025-02-20 14:33:27: 0: PYTHON: >>> Temporary path: C:\Users\admin\AppData\Local\Temp\20250220133327458502_4427a3bf-ef8f-11ef-98ee-b7fa457786ec.json
2025-02-20 14:33:27: 0: PYTHON: Traceback (most recent call last):
2025-02-20 14:33:27: 0: PYTHON: File "C:\ProgramData\Thinkbox\Deadline10\workers\ADCDSB-01\plugins\67b72f150229a3b988f44f71\GlobalJobPreLoad.py", line 487, in inject_ayon_environment
2025-02-20 14:33:27: 0: PYTHON: raise RuntimeError((
2025-02-20 14:33:27: 0: PYTHON: RuntimeError: Missing required env vars: AVALON_PROJECT, AVALON_ASSET, AVALON_TASK, AVALON_APP_NAME
2025-02-20 14:33:27: 0: PYTHON: !!! Injection failed.
2025-02-20 14:33:28: 0: Done executing plugin command of type 'Start Job'
```

=======================================================

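I am having an issue: when I submit a Karma XPU job from Houdini Solaris, it fails during the Ayon environment injection in GlobalJobPreLoad.py because the required environment variables (AVALON_PROJECT, AVALON_ASSET, AVALON_TASK, AVALON_APP_NAME) are missing.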
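For reference, the check that fails can be approximated with the small Python sketch below. It is a hypothetical helper (`missing_env_vars` is not part of Ayon or Deadline) and is not the actual code at line 487 of GlobalJobPreLoad.py; it only assumes that a variable counts as missing when it is unset or empty, and reuses the four names from the RuntimeError.

```python
import os
from typing import Iterable, List, Mapping, Optional

# Variable names copied from the RuntimeError in the Deadline log.
REQUIRED_VARS = ("AVALON_PROJECT", "AVALON_ASSET", "AVALON_TASK", "AVALON_APP_NAME")


def missing_env_vars(
    env: Optional[Mapping[str, str]] = None,
    required: Iterable[str] = REQUIRED_VARS,
) -> List[str]:
    """Return the required variable names that are unset or empty in `env`.

    Hypothetical diagnostic: it mimics the kind of check that raised the
    error, it is not the real implementation in GlobalJobPreLoad.py.
    Defaults to the current process environment when `env` is None.
    """
    if env is None:
        env = os.environ
    # Keep the order of `required` so the output matches the error message.
    return [name for name in required if not env.get(name)]


if __name__ == "__main__":
    missing = missing_env_vars()
    if missing:
        print("Missing required env vars: " + ", ".join(missing))
    else:
        print("All required env vars are set.")
```

Calling `missing_env_vars()` with no argument inspects the environment of the process it runs in; passing a dict instead lets you check any other set of key/value pairs, such as the environment values listed on the job.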

I have checked a similar topic on the forum, but it did not solve my issue.

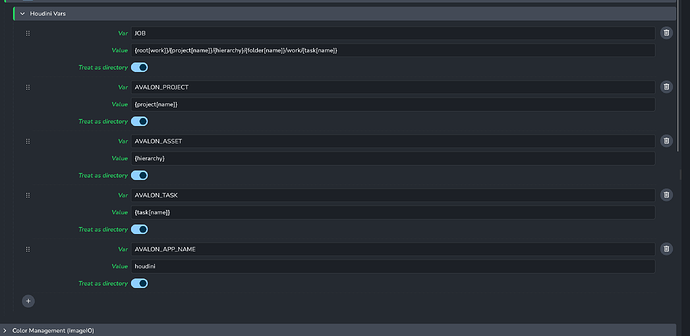

One thing I have tried is adding the variables to the Houdini plugin, but the job still fails, even though the variables now seem to load correctly. Any idea or lead I could follow?

Thanks!