A fast, reliable way to assemble a timeline from the current shot versions is crucial for judging continuity.

The process has to be as friction-free as possible: easy, fast, and ideally automatic, so that it can produce timeline dailies.

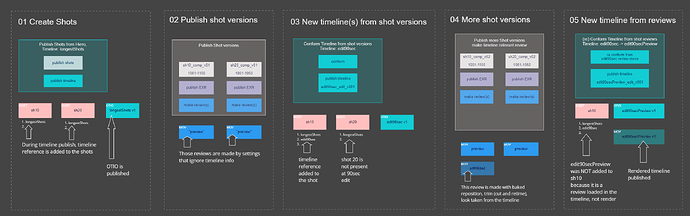

Playing the current state of edit

In a better world, there would be no need to render a timeline; an OTIO-compatible player would show the current state of the timeline on the fly.

Publishing (rendering) current state of edit

Production reality often dictates that the timeline be rendered to a distribution format such as H.264.

The timeline render can be sped up considerably by using the reviews generated during shot publish instead of the full-resolution published files.

There should be a way to assemble the edit either from the versions (high res) or from their reviews.
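As a minimal sketch of that choice, the snippet below picks one media path per shot, preferring the review and falling back to the full-resolution publish when no review exists. The `ShotVersion` record, its field names and the `pick_media` helper are hypothetical and only illustrate the idea; they are not part of any existing API.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class ShotVersion:
    """Hypothetical record for one published shot version."""
    shot: str
    version: int
    publish_path: str             # full-resolution published files
    review_path: Optional[str]    # review generated during publish, if any


def pick_media(versions: List[ShotVersion],
               prefer_review: bool = True) -> List[Tuple[str, str]]:
    """Return (shot, path) pairs in the given order.

    With prefer_review=True the review is used when it exists; otherwise
    the full-resolution publish is used.
    """
    picked = []
    for v in versions:
        if prefer_review and v.review_path:
            picked.append((v.shot, v.review_path))
        else:
            picked.append((v.shot, v.publish_path))
    return picked
```

Keeping the switch in one place means the same assembly step can serve both a quick dailies render and a full-quality render.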

Timeline relevant shot properties

The shot properties relevant for timeline assembly are:

- reposition (timeline resolution)

- shot colorspace and look (ideally in the timeline colorspace)

- shot edit (head and tail, possible speed change)

- letterbox and / or pillarbox

- maybe some metadata such as timecode and reel ID to help auto-assembly (for hosts like Resolve)

Having a way to bake all the properties above into the review would not only let the artist judge the shot output as it appears in each edit, but also make assembling the edit as easy as splicing the reviews one after another. It would also make per-shot burn-ins in the timeline easy.
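If the trim and retime are already baked into each review, splicing reduces to adding up durations. The sketch below assumes exactly that and computes where each review lands on the timeline; `Review`, its `frames` field and `splice` are hypothetical names used only for illustration.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Review:
    """Hypothetical review clip with edit properties already baked in."""
    shot: str
    frames: int   # number of frames in the review, no handles left


def splice(reviews: List[Review],
           start_frame: int = 0) -> List[Tuple[str, int, int]]:
    """Lay reviews end to end.

    Returns (shot, record_in, record_out) per review, with record_out
    exclusive, so each record_out equals the next record_in.
    """
    cursor = start_frame
    events = []
    for r in reviews:
        if r.frames <= 0:
            raise ValueError(f"{r.shot}: review has no frames")
        events.append((r.shot, cursor, cursor + r.frames))
        cursor += r.frames
    return events


cut = splice([Review("sh010", 48), Review("sh020", 24), Review("sh030", 72)])
# cut == [("sh010", 0, 48), ("sh020", 48, 72), ("sh030", 72, 144)]
```

No per-clip trim, reposition or color step is needed at assembly time, which is the point of baking those properties into the review.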

How and where to store shot properties relevant for timelines

The question is how to store the timeline- and edit-relevant info for shots. Is it better to store a subset of reposition, edit (trim and retime), letterbox and colorspace / look for each timeline in the edit, or to just link edits to the shots they use and pull the info from the timelines? Most workflows are shot-centric, but timelines and edits change frequently.
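To make the trade-off concrete, here is one possible reading of the two layouts as plain data structures. Everything in it (`TimelineProps`, `Shot.props_by_edit`, `Edit.clips`, the field names and defaults) is an assumption made for illustration, not an existing schema.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple


@dataclass
class TimelineProps:
    """Hypothetical per-timeline property subset for one shot."""
    reposition: Tuple[float, float, float] = (0.0, 0.0, 1.0)  # x, y, scale
    head: int = 0                      # frames trimmed at the start
    tail: int = 0                      # frames trimmed at the end
    speed: float = 1.0                 # retime factor
    letterbox: Optional[float] = None  # target aspect ratio, if any
    colorspace: str = ""               # timeline colorspace / look name


@dataclass
class Shot:
    """Shot-centric layout: the shot carries one subset per edit."""
    name: str
    props_by_edit: Dict[str, TimelineProps] = field(default_factory=dict)


@dataclass
class Edit:
    """Edit-centric layout: the edit links shots and holds their subsets."""
    name: str
    clips: Dict[str, TimelineProps] = field(default_factory=dict)


def props_from_shot(shot: Shot, edit_name: str) -> TimelineProps:
    """Look up the subset on the shot, keyed by edit name."""
    return shot.props_by_edit[edit_name]


def props_from_edit(edit: Edit, shot_name: str) -> TimelineProps:
    """Look up the subset on the edit, keyed by shot name."""
    return edit.clips[shot_name]
```

Both lookups return the same kind of record; the difference is which entity has to be updated when a timeline changes, which matters because edits change far more often than shots.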