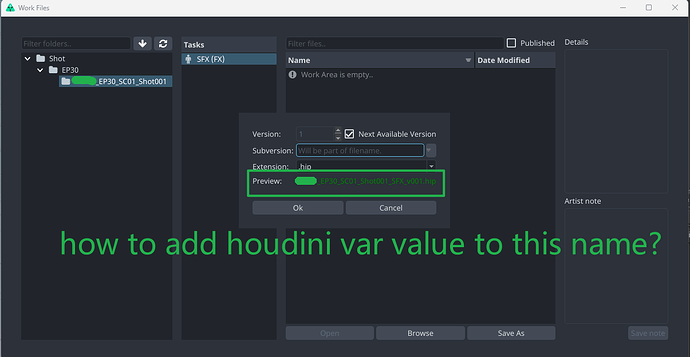

I want to use frameStart and frameEnd in the workfile template, so I need to add these task attributes to the template keys. How can I do this?

This question has been asked before somewhere on the forums, Discord or GitHub… I can't remember where. But here's what I still remember:

One may wonder which frame range should be added to the template keys that are later used to generate the work-file name.

Should we use the frame range from the asset info on the AYON server?

Or should we use the actual frame range set inside the work-file?

So, it's not advisable to put frame start and end in file names, as there's no guarantee that the work-file keeps exactly the same frame range. Therefore, the work-file itself should be the source of truth.

Maybe @BigRoy has something to add.

Also, for reference, I ran into the same idea you described in your post, but in a different situation, when I was implementing Update Houdini Vars on context change (AYON Docs), introduced in OpenPype PR #5651.

I ended up writing the following function to add assetData to the template keys.

The assetData values are computed from the currently running DCC session.
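As a rough illustration of that idea (a hypothetical sketch, not the function from the PR and not AYON's API), the snippet below reads a frame range from the running session and merges it into the template data before the name is formatted. It assumes template data is a plain dict and uses simple Python `str.format` placeholders; `add_frame_range_keys`, `get_session_frame_range` and `WORKFILE_TEMPLATE` are made-up names, and AYON's real template syntax (optional `<...>` parts and so on) is not modelled.

```python
"""Hypothetical sketch: extend workfile template data with a frame range
read from the running DCC session. Names are illustrative, not AYON API."""

from typing import Any, Callable, Dict, Tuple


def add_frame_range_keys(
    template_data: Dict[str, Any],
    get_session_frame_range: Callable[[], Tuple[int, int]],
) -> Dict[str, Any]:
    """Return a copy of template_data with frameStart/frameEnd keys added.

    get_session_frame_range is a hypothetical callback; in Houdini it could
    read the global frame range ($FSTART/$FEND), other DCCs would differ.
    """
    frame_start, frame_end = get_session_frame_range()
    data = dict(template_data)  # copy so the caller's dict is left untouched
    data["frameStart"] = int(frame_start)
    data["frameEnd"] = int(frame_end)
    return data


# Simplified template using str.format placeholders (an assumption for
# illustration only, not an AYON default template).
WORKFILE_TEMPLATE = "{folder}_{task}_{frameStart}-{frameEnd}_v{version:03d}.{ext}"


if __name__ == "__main__":
    base = {"folder": "sh010", "task": "fx", "version": 3, "ext": "hip"}
    data = add_frame_range_keys(base, lambda: (1001, 1100))
    print(WORKFILE_TEMPLATE.format(**data))  # sh010_fx_1001-1100_v003.hip
```

Taking the range from the session rather than from the server keeps the name in line with the scene at save time, but it still goes stale if the range changes later.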

I've tweaked your post title a little, if you don't mind.

Feel free to change it back if you think it's not relevant.

Many thanks, I will take a look at this!

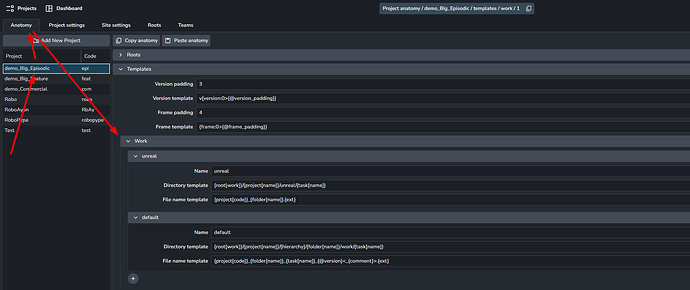

As far as I know, you can set up your work file name templates in the project settings.

You can find the available template keys here.

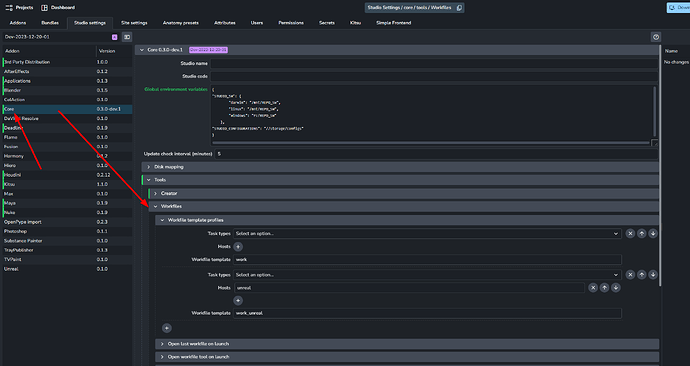

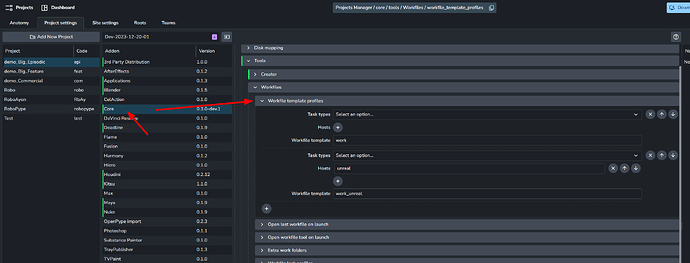

Then you can tell AYON to use your name templates, either in the studio settings or in the project settings.

| Studio Settings | Project Settings |
| --- | --- |

If I understood you correctly, the only way would be to add these specific Houdini env vars to the available template keys here, so that you can use them in your work file naming templates.

tbh, I'm not sure how such a feature would be implemented technically.

Also, this should be discussed from a pipeline-management point of view, because allowing more keys can have a negative effect: your work file name may give false information about the work file. For example, adding frame start and frame end to the template keys can be misleading if the work file contains a different frame range.
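To make that risk concrete, here is a small hypothetical check (not part of AYON) that parses a frame range out of a work-file name and compares it with the range actually set in the scene. It assumes names follow an illustrative `{folder}_{task}_{frameStart}-{frameEnd}_v{version}` pattern; the regex, `range_from_name` and `name_is_misleading` are made-up for this example.

```python
"""Hypothetical sketch: detect when a frame range baked into a work-file
name no longer matches the range actually set in the scene."""

import re
from typing import Optional, Tuple

# Assumed naming pattern "..._<start>-<end>_v<version>..."; an illustration,
# not an AYON default template.
FRAME_RANGE_RE = re.compile(r"_(\d+)-(\d+)_v\d+")


def range_from_name(filename: str) -> Optional[Tuple[int, int]]:
    """Return (start, end) parsed from the name, or None if absent."""
    match = FRAME_RANGE_RE.search(filename)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


def name_is_misleading(filename: str, scene_range: Tuple[int, int]) -> bool:
    """True when the name encodes a range that differs from scene_range."""
    named_range = range_from_name(filename)
    return named_range is not None and named_range != scene_range


if __name__ == "__main__":
    name = "sh010_fx_1001-1100_v003.hip"
    print(name_is_misleading(name, (1001, 1100)))  # False: name matches scene
    print(name_is_misleading(name, (1001, 1150)))  # True: range was extended
```

A check like this can only flag the mismatch after the fact; keeping the frame range out of the name avoids the problem altogether.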

Maybe @iLLiCiT can tell us more.

Thank you, now I understand!